Emoticon

Concept Creation & Brand Identity

Exploring a social experiment through gestures and movement. An innovative approach that translates emotions into animated avatars, enhancing communication and self-expression in the virtual world.

Services

- Concept creation

- User research

- Technical implementation

- Brand identity

- Illustration

Year

- 2020

About the project

While technology has revolutionized the way we connect, it often overlooks the emotional aspect of our interactions. This study aims to bridge that gap by exploring how body movement can enable more expressive and emotionally rich communication in digital spaces.

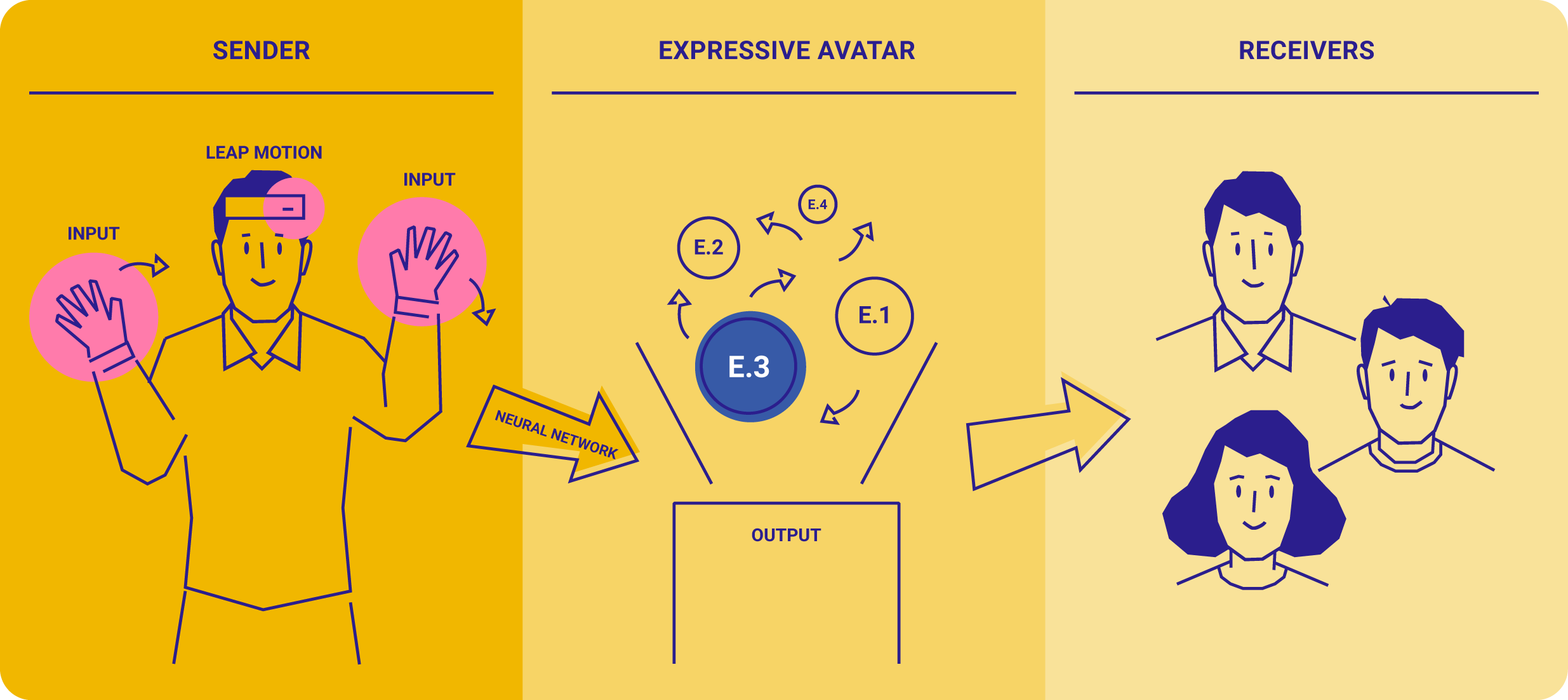

The main challenge was to create a system that translates upper-body gestures into emotional avatars to improve communication. Using Leap Motion for gesture recognition and incorporating universal communication principles and psychological studies on emotional expression, the prototype demonstrates how technology can enhance emotional connection in virtual spaces. Additionally, branding and promotional assets were developed to engage the audience and encourage participation in the experiment.

"In the end, technology has only helped us become more emotionally lazy and withdrawn."

Sherry Turkle, "Alone Together"

Researching Emotion and Gesture

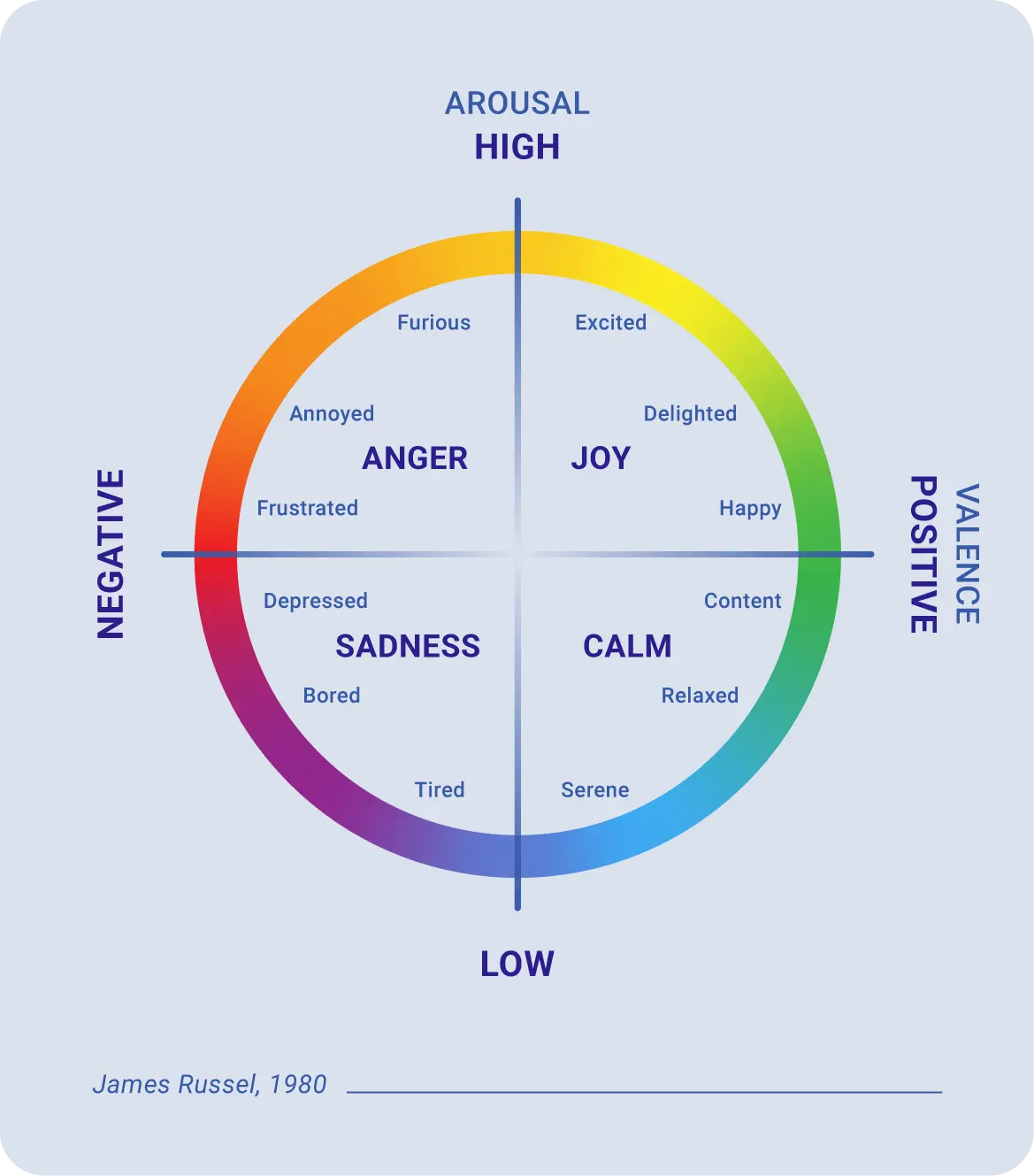

Communication is the exchange of a message between a sender and a receiver, with every message carrying both content and feeling. This research began by examining human emotions through the lens of energy and valence. Because the project was experimental, the work focused on the four main quadrants of that space, represented by Joy, Sadness, Anger, and Calm. The next step was to look into psychology and body language to map the common gestures associated with these emotions.
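As a rough illustration of the energy and valence framing, the four emotions can be read as the four quadrants of a two-axis space. The sketch below is not part of the original prototype; the function name, the choice of 0 as the boundary, and the handling of values that fall exactly on an axis are assumptions made for the example.

```python
def emotion_quadrant(valence: float, energy: float) -> str:
    """Map a (valence, energy) pair to one of the four quadrant emotions.

    Assumption: both axes are centred on 0, with positive valence meaning
    "pleasant" and positive energy meaning "activated". Values exactly on
    an axis are assigned to the positive side.
    """
    if energy >= 0:
        # High energy: pleasant -> Joy, unpleasant -> Anger
        return "Joy" if valence >= 0 else "Anger"
    # Low energy: pleasant -> Calm, unpleasant -> Sadness
    return "Calm" if valence >= 0 else "Sadness"


if __name__ == "__main__":
    for point in [(0.8, 0.7), (-0.6, 0.9), (-0.5, -0.4), (0.3, -0.8)]:
        print(point, emotion_quadrant(*point))
```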

Developing the Recognition System

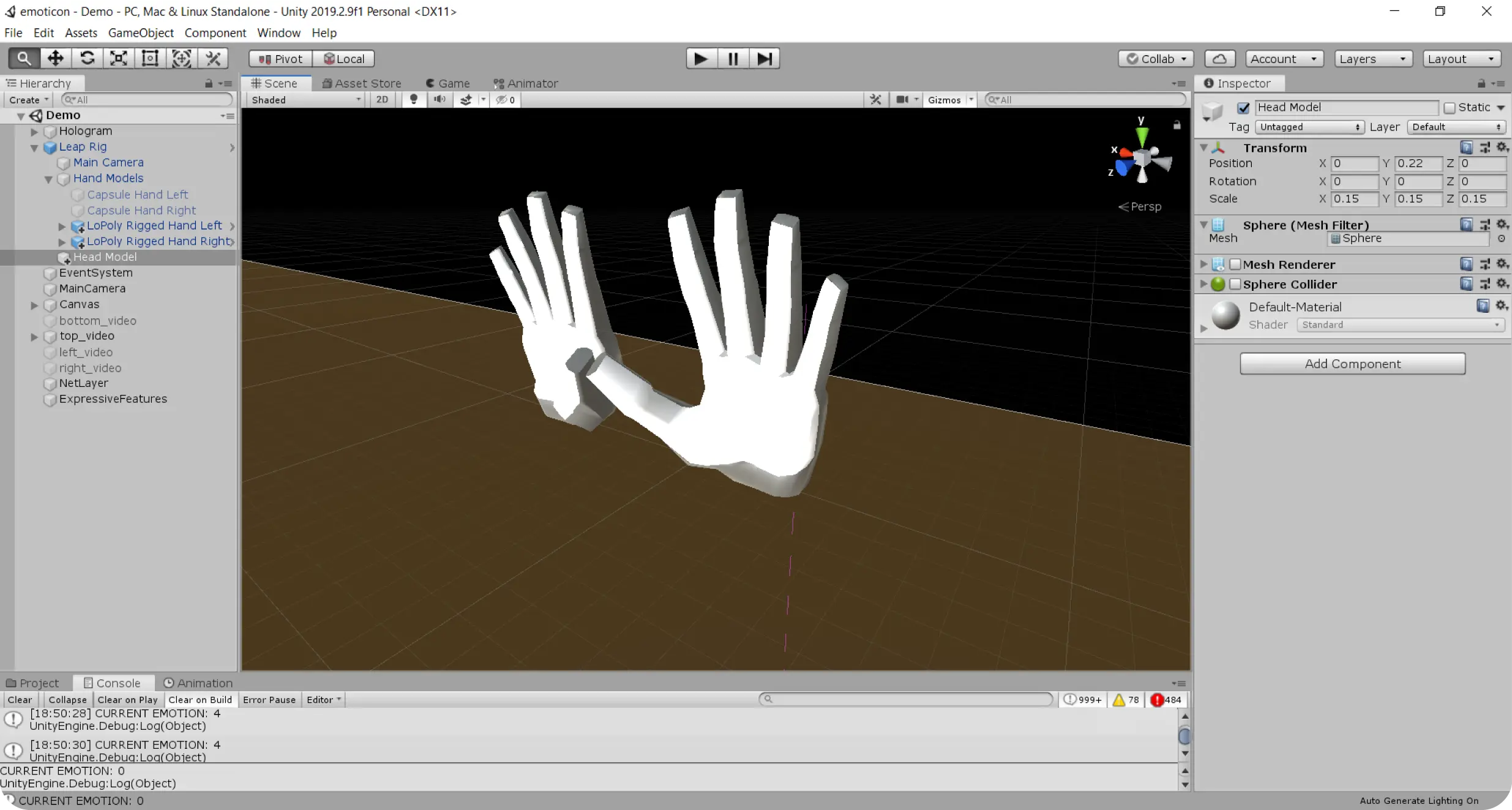

The first step in using the Leap Motion was determining the optimal placement and support for accurate hand tracking. Initial tests involved attaching the device to the user’s chest, aiming for a more comfortable and easily interchangeable setup. However, it soon became clear that the best way to achieve the intended results was to mount it on the user's head, ensuring a wider and more reliable field of view.

With the Leap Motion placement finalized, the focus shifted to developing the deep neural network. After researching available resources, we adapted an open-source library from GitHub and built an interface around it that analyzed four key movement parameters: symmetry, trajectory spread, spatial extent, and energy. The network was trained on sequences of repeated gestures to improve accuracy while minimizing detection noise. Once trained, the system validated an emotion only when its confidence level exceeded 50%, which then triggered the corresponding visual and audio response.
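The validation step described above can be sketched as a simple confidence gate. This is a minimal example and not the project's code: it assumes the network emits one raw score per emotion and that a softmax turns those scores into confidences. The names `EMOTIONS`, `softmax`, and `validate_emotion` are hypothetical; only the 50% threshold comes from the project.

```python
import math
from typing import List, Optional, Sequence

# Order of the network outputs is an assumption made for this sketch.
EMOTIONS = ("Joy", "Sadness", "Anger", "Calm")
CONFIDENCE_THRESHOLD = 0.5  # the 50% cut-off described in the text


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw scores into confidences that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def validate_emotion(
    scores: Sequence[float], threshold: float = CONFIDENCE_THRESHOLD
) -> Optional[str]:
    """Return the most likely emotion, or None if confidence is too low."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best] if probs[best] > threshold else None


if __name__ == "__main__":
    print(validate_emotion([4.0, 0.0, 0.0, 0.0]))  # one clear winner
    print(validate_emotion([0.0, 0.0, 0.0, 0.0]))  # no clear winner
```

Returning `None` below the threshold keeps ambiguous gestures from triggering any avatar, which is one way to limit detection noise.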

Designing Expressive Avatars

The avatar design focused on creating a universally adaptable form that could effectively convey emotions. A flame-like shape with a simple face was chosen to maintain an approachable feel. Research on color psychology, shapes, and cultural perceptions guided the design choices. The avatars were color-coded based on the arousal circle of color. To enhance emotional impact, additional elements like waves were used to differentiate each emotion, balancing smooth, friendly movements with sharper, more intense features where needed. Facial expressions were carefully crafted based on established studies, ensuring clear, universally recognizable emotions through subtle variations in eye and mouth orientation.

The avatar’s audio was composed based on cross-cultural studies on music and emotion. Key audio descriptors (melody, pitch variation, tempo, rhythm complexity, and amplitude) were chosen to align with universal emotional responses. These principles ensured that each avatar's sound design reinforced its visual representation.

Bringing the System to Life

With all system components developed, we integrated them into Unity. Since the final output was a holographic display, we used Unity's canvas to render views from four cameras, creating the necessary 3D visualization. Finally, an algorithm was implemented to trigger videos and sounds based on the Deep Neural Network’s detected emotions, with each emotion consisting of three animations: fade-in, loop, and fade-out.
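The trigger logic can be pictured as a small state machine over the three animations per emotion. The sketch below is written in Python for illustration only and is not the Unity implementation: the `AvatarPlayer` class, the clip naming scheme (`Joy_fade_in`, and so on), and the idea of returning a queue of clip names are all assumptions.

```python
from typing import List, Optional


class AvatarPlayer:
    """Tracks the emotion on display and decides which clips to queue."""

    def __init__(self) -> None:
        self.current: Optional[str] = None  # no avatar shown at start

    def on_detection(self, emotion: Optional[str]) -> List[str]:
        """Return the clip names to play for a new detection result.

        `emotion` is None when no emotion passed validation.
        """
        if emotion == self.current:
            # Same state as before: keep looping, or stay idle.
            return [f"{emotion}_loop"] if emotion else []
        clips: List[str] = []
        if self.current is not None:
            clips.append(f"{self.current}_fade_out")  # leave the old emotion
        if emotion is not None:
            clips += [f"{emotion}_fade_in", f"{emotion}_loop"]
        self.current = emotion
        return clips


if __name__ == "__main__":
    player = AvatarPlayer()
    for detected in ["Joy", "Joy", "Anger", None]:
        print(detected, "->", player.on_detection(detected))
```

Keeping the fade-out of the previous emotion ahead of the fade-in of the next one avoids two avatars overlapping during a transition.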

Crafting the Brand and User Experience

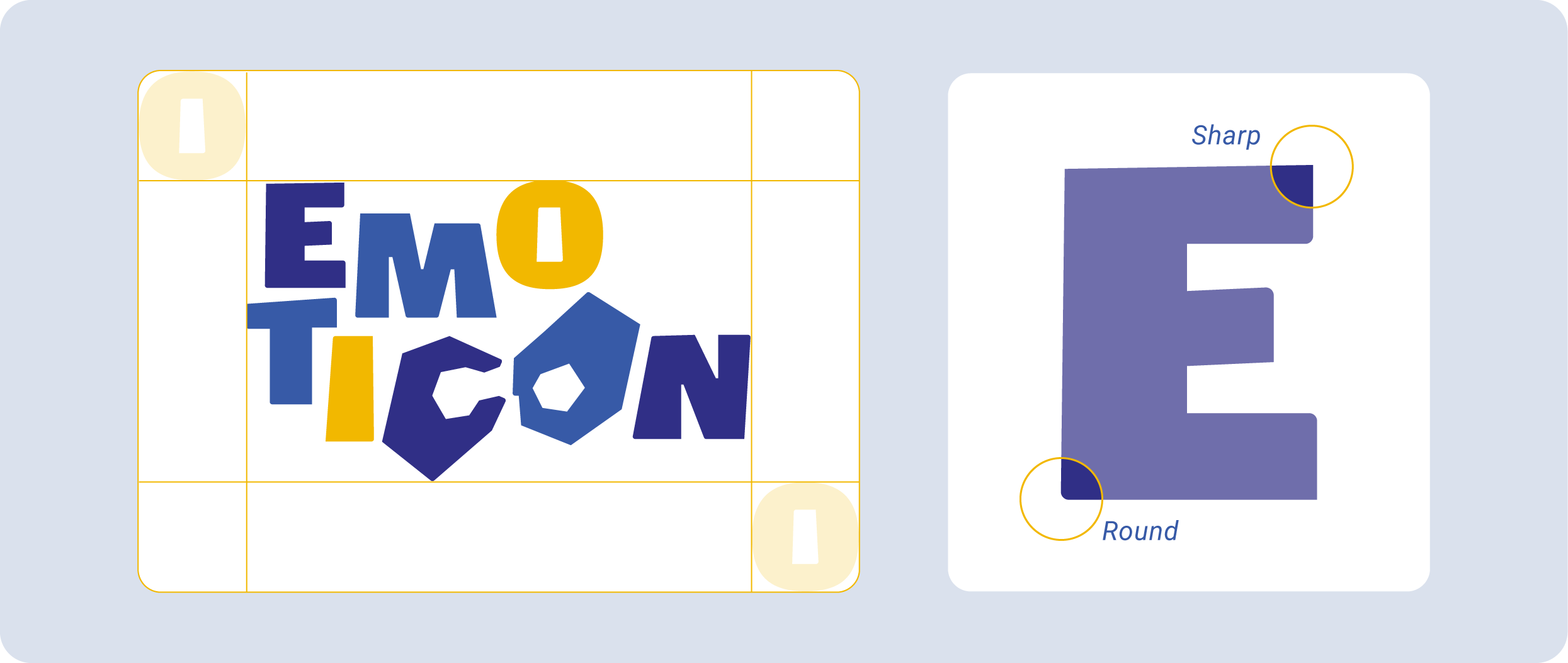

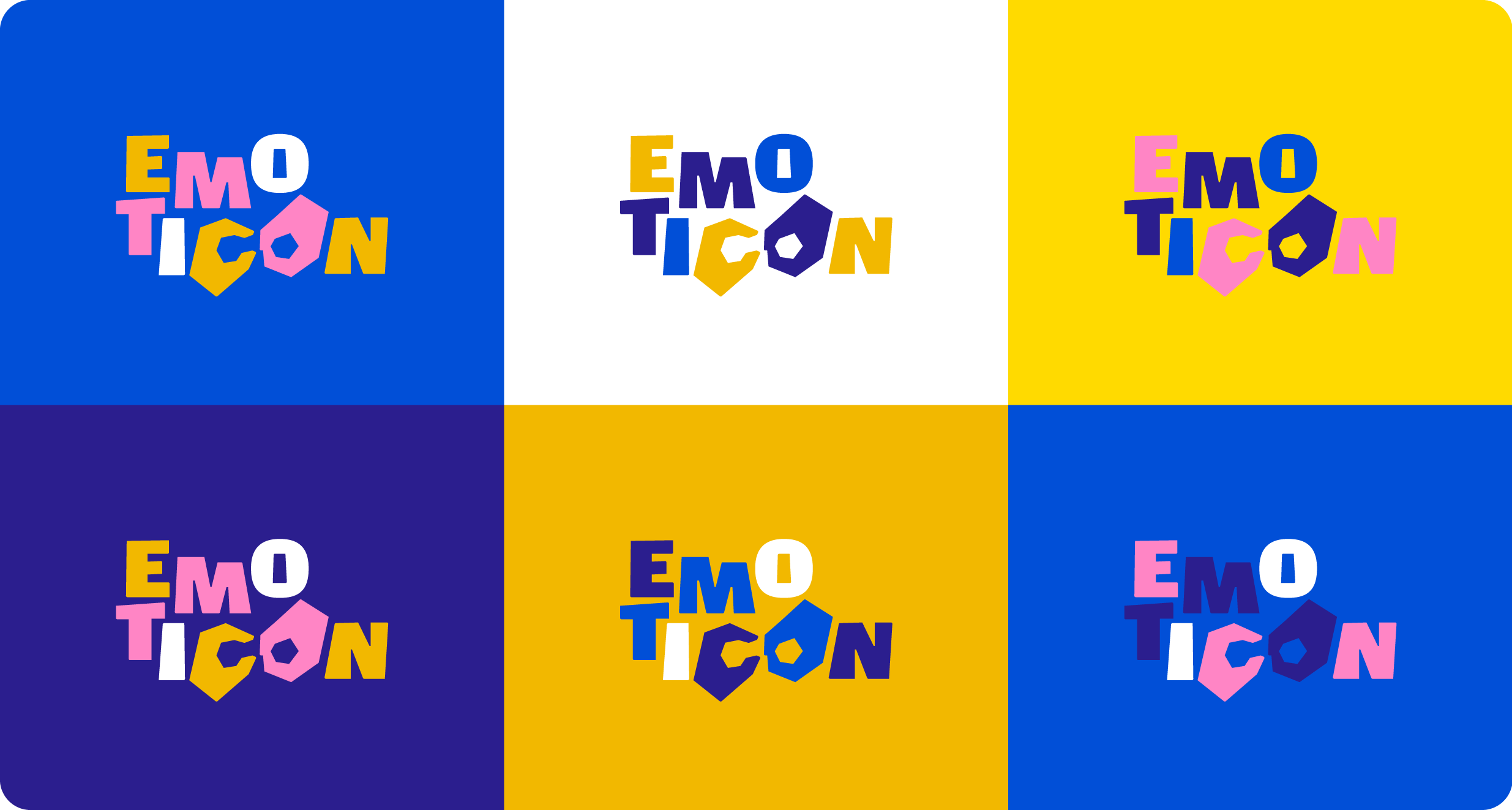

The branding process was swift and intentional, aimed at attracting users to our booth and enhancing their engagement with the experience. We began by designing a playful, experimental logo that visually incorporated hands. Inspired in part by the latest Inside Out movie, we customized the typography by adjusting the shapes and positioning of the letters. The combination of rounded and sharp corners symbolized the contrast between extreme and subtle emotions. For the color palette, we explored multiple combinations, ultimately selecting a balanced mix of warm and cool tones to reflect the varying energy and valence of emotions.

To introduce our concept, we created a flyer featuring an inspiring quote that encouraged readers to reflect on human behavior and emotions. It also included a concise pitch explaining our project.

Although the experience revolves around a Natural User Interface (NUI), human emotional expression is largely unconscious. To help users become more aware of their own behaviors before interacting with the system, we gamified the introduction phase. Users first picked a card depicting an emotion alongside insights into its typical expressions. This step primed them for the main interaction.

Once equipped with the headband-integrated Leap Motion sensor, users performed various gestures, seeing and hearing their movements translated in real time into the avatar. The holographic display was chosen not only for its wider visibility but also to enhance the “wow” factor and playful nature of the experience.

The Value

Incorporating insights from psychology and sociology into this project enhances the effectiveness of digital communication by leveraging natural human behaviors. By focusing on upper-body movements to express emotions, this research bridges a gap in computer science, contributing to the evolution of more intuitive and emotionally responsive interfaces.

This approach supports the development of Natural User Interfaces (NUI), ensuring that future technologies align more closely with human interaction patterns, making digital experiences more natural, accessible, and emotionally engaging.